Continual Aggregate Hub architecture and restaurant

The continual aggregate hub (CAH) has these core (albeit high-level) architectural elements:

- Component based and layered

- Process oriented

- Open standards

- Object oriented

- Test, re-run and simulate

- And last, it should behave as a restaurant

|

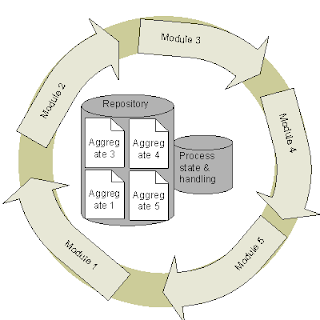

| CAH processing architecture |

Component based and layered

The main concern is to protect the business logic from the technical domain. Freedom to deploy! This is more or less obvious, but the solution should consist of small, compact Modules with a clear functional purpose, embedded in a container that abstracts the technical constraints away from the functional ones. This is roughly what dependency injection and a Java container give us.
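Here is a minimal Java sketch of this separation using plain constructor injection. The names `AggregateStore`, `IncomeSumModule` and `MapStore` are hypothetical. The Module depends only on an interface owned by the functional domain. Whatever plays the container (here simply `main`) decides which technical implementation gets plugged in.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: business logic sees only the AggregateStore interface;
// the "container" (plain constructor injection in main) picks the technology.
public class ComponentSketch {

    // Port owned by the functional domain; no technical types leak through it.
    public interface AggregateStore {
        Long read(String key);
        void write(String key, Long value);
    }

    // A small Module with one clear functional purpose (hypothetical example).
    public static final class IncomeSumModule {
        private final AggregateStore store;

        public IncomeSumModule(AggregateStore store) { // dependency is injected
            this.store = store;
        }

        public long addIncome(String familyId, long amount) {
            Long current = store.read(familyId);
            long updated = (current == null ? 0L : current) + amount;
            store.write(familyId, updated);
            return updated;
        }
    }

    // One technical implementation; an in-memory grid adapter could replace it.
    public static final class MapStore implements AggregateStore {
        private final Map<String, Long> data = new HashMap<>();
        public Long read(String key) { return data.get(key); }
        public void write(String key, Long value) { data.put(key, value); }
    }

    public static void main(String[] args) {
        IncomeSumModule module = new IncomeSumModule(new MapStore());
        module.addIncome("family-1", 100);
        System.out.println(module.addIncome("family-1", 50)); // 150
    }
}
```

Swapping `MapStore` for another implementation changes nothing in `IncomeSumModule`. That independence is what protects the business logic.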

The components and the layers should be independent so that they can scale out.

The TaxInfo data store and cache is preferably based on some in-memory architecture (e.g. GigaSpace…), with relevant services around it. A Module deploys service components into this architecture. These services peek (consume) or poke (produce) into the aggregates that the Model owns, and they are used by humans or other systems. In DDD terms, the Bounded Context spans vertically across the User Interface Architecture, TaxInfo and the Processing Architecture.
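To make peek and poke concrete, here is a hypothetical in-memory stand-in for the TaxInfo store. It is built on `ConcurrentHashMap`, not on any real data-grid API. The keying scheme (tax family plus aggregate type) and the XML string payload are assumptions for illustration.

```java
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical in-memory stand-in for the TaxInfo data store and cache.
public class TaxInfoSketch {

    // Aggregates addressed by tax family and aggregate type (assumed keying scheme).
    public record AggregateKey(String taxFamilyId, String aggregateType) {}

    public static final class TaxInfoStore {
        private final ConcurrentMap<AggregateKey, String> aggregates = new ConcurrentHashMap<>();

        // peek: consume (read) an aggregate without changing it
        public Optional<String> peek(AggregateKey key) {
            return Optional.ofNullable(aggregates.get(key));
        }

        // poke: produce (write or replace) an aggregate as a whole
        public void poke(AggregateKey key, String aggregate) {
            aggregates.put(key, aggregate);
        }
    }

    public static void main(String[] args) {
        TaxInfoStore store = new TaxInfoStore();
        AggregateKey key = new AggregateKey("family-1", "IncomeStatement");
        store.poke(key, "<income total=\"150\"/>");
        System.out.println(store.peek(key).orElse("missing"));
    }
}
```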

The User Interface Architecture is a browser-based GUI platform where users can move from one page to another without knowing which system they are actually working with. We do not need a portal product, only a set of common components, standards and a common security architecture.

Process oriented

The Modules should cooperate by producing Aggregates. The architecture must continually produce Aggregates so that they are simple to consume. This provides a basis for parallel processing, sharding and linear scalability, and it also makes the process more robust.
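Continually produced, independent aggregates make sharding possible: every aggregate can be routed by a stable key. The sketch below assumes the tax family is that key and uses a simple hash-modulo assignment. `shardFor` and `partition` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: route tax families to shards by a stable hash.
public class ShardingSketch {

    // String.hashCode is specified by the JDK, so the assignment is stable across JVMs.
    public static int shardFor(String taxFamilyId, int shardCount) {
        return Math.floorMod(taxFamilyId.hashCode(), shardCount);
    }

    // Group families per shard; each shard can then be processed by its own worker.
    public static Map<Integer, List<String>> partition(List<String> familyIds, int shardCount) {
        Map<Integer, List<String>> shards = new TreeMap<>();
        for (String id : familyIds) {
            shards.computeIfAbsent(shardFor(id, shardCount), s -> new ArrayList<>()).add(id);
        }
        return shards;
    }

    public static void main(String[] args) {
        List<String> families = List.of("family-1", "family-2", "family-3", "family-4");
        System.out.println(partition(families, 2));
    }
}
```

Hash-modulo is the simplest choice, but changing the shard count moves most families to a new shard. A production system might prefer consistent hashing.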

Manual and automated processes cooperate through a shared process state and a common understanding of the Case.

A functional domain consists of Modules for automated processing and Modules for human interaction. Human interaction is mainly querying or inspecting a set of data, or making manual changes to data.

Between processing Modules there is either a queue or some other kind of buffer, or the Module may process at will.
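A queue between two Modules can be as simple as a bounded `java.util.concurrent.BlockingQueue`. In this sketch the upstream Module puts changed tax family ids into the buffer. The downstream Module takes them at its own pace. The end-of-stream marker and the `assessed:` result format are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: two Modules decoupled by a bounded buffer.
public class QueueSketch {

    private static final String END = "__END__"; // hypothetical end-of-stream marker

    // Downstream Module: drains the queue at its own pace.
    public static List<String> runConsumer(BlockingQueue<String> queue) throws InterruptedException {
        List<String> processed = new ArrayList<>();
        while (true) {
            String familyId = queue.take(); // blocks until work is available
            if (END.equals(familyId)) {
                return processed;
            }
            processed.add("assessed:" + familyId);
        }
    }

    public static List<String> pipeline(List<String> changedFamilies) throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>(100); // bounded buffer
        List<String> result = new ArrayList<>();
        Thread consumer = new Thread(() -> {
            try {
                result.addAll(runConsumer(queue));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        // Upstream Module: produces work items; put() blocks if the buffer is full.
        for (String id : changedFamilies) {
            queue.put(id);
        }
        queue.put(END);
        consumer.join(); // join() makes the consumer's writes to result visible here
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(pipeline(List.of("family-1", "family-2")));
    }
}
```

Because the buffer is bounded, a slow downstream Module applies back-pressure instead of letting work pile up without limit.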

Open standards

The solution we implement will live for many years (20-30?), so we must choose open standards that we expect to last. Even more important are the architectural paradigms, which will outlive the standards themselves. The stack will never consist of a single technology.

We must protect our investment in business logic (see Component based and layered), and we must protect the data we collect and store.

As of today we say Java, HTML, and XML (for the permanent data store).
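Storing aggregates as XML needs nothing beyond the JDK's standard `javax.xml` APIs. This sketch serializes a hypothetical income aggregate to an XML string; the element and attribute names are assumptions. How that string is persisted is left open.

```java
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

// Hypothetical sketch: an aggregate serialized with JDK-only XML APIs.
public class XmlStoreSketch {

    public static String toXml(String taxFamilyId, long totalIncome) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
        Element root = doc.createElement("incomeAggregate"); // assumed element name
        root.setAttribute("taxFamilyId", taxFamilyId);
        Element total = doc.createElement("totalIncome");
        total.setTextContent(Long.toString(totalIncome));
        root.appendChild(total);
        doc.appendChild(root);

        Transformer transformer = TransformerFactory.newInstance().newTransformer();
        transformer.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
        StringWriter out = new StringWriter();
        transformer.transform(new DOMSource(doc), new StreamResult(out));
        return out.toString();
    }

    public static void main(String[] args) throws Exception {
        System.out.println(toXml("family-1", 150));
    }
}
```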

Object oriented

Business logic within our domain is best represented in an object-oriented manner. Every aggregate has an object-oriented model and logic with basic behavior (a basic Module). Higher-level business logic can then operate on these aggregates.

With a 1:1 relationship between stored data and basic behavior (in the application layer), locking and IO benefit: an aggregate is read, locked and written as a single unit.
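Here is a sketch of an aggregate that carries its own basic behavior, assuming a hypothetical `TaxFamily` with a list of incomes. The aggregate is read and written as one unit, so a single version number is enough for optimistic locking. Optimistic locking is an assumption here, one possible way to realize the locking benefit rather than something the architecture prescribes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch: stored data and basic behavior live in one aggregate.
public class AggregateSketch {

    public record TaxFamily(String id, long version, List<Long> incomes) {
        public TaxFamily {
            incomes = List.copyOf(incomes); // immutable copy
        }

        public long totalIncome() {
            long sum = 0;
            for (long income : incomes) sum += income;
            return sum;
        }

        // Basic behavior returns a new version of the whole aggregate.
        public TaxFamily withIncome(long amount) {
            List<Long> updated = new ArrayList<>(incomes);
            updated.add(amount);
            return new TaxFamily(id, version + 1, updated);
        }
    }

    // Whole-aggregate store with optimistic locking on one version number.
    public static final class Store {
        private final Map<String, TaxFamily> byId = new ConcurrentHashMap<>();

        public TaxFamily load(String id) {
            return byId.getOrDefault(id, new TaxFamily(id, 0, List.of()));
        }

        // Succeeds only if the stored version is exactly one behind the update.
        public boolean save(TaxFamily updated) {
            boolean[] ok = {false};
            byId.compute(updated.id(), (id, current) -> {
                long currentVersion = current == null ? 0 : current.version();
                if (updated.version() == currentVersion + 1) {
                    ok[0] = true;
                    return updated;
                }
                return current; // stale write: keep what is stored
            });
            return ok[0];
        }
    }

    public static void main(String[] args) {
        Store store = new Store();
        store.save(store.load("family-1").withIncome(100));        // version 1
        TaxFamily writerA = store.load("family-1").withIncome(50); // version 2
        TaxFamily writerB = store.load("family-1").withIncome(70); // version 2
        System.out.println(store.save(writerA)); // true
        System.out.println(store.save(writerB)); // false: stale version
        System.out.println(store.load("family-1").totalIncome()); // 150
    }
}
```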

Test, re-run and simulate

Discrete processing steps with defined Modules and aggregates make testing easier, since each step can be tested in isolation with known input and output aggregates.

All systems have errors, and these often affect data that has been produced over some time. With defined aggregates and a journal archive of all events (the Process state component), it is possible to simply truncate the affected aggregates and restart the fixed Modules.
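The truncate-and-rerun idea can be sketched with an append-only journal and derived aggregates. `Hub`, `BUGGY` and `FIXED` are hypothetical names. The journal is never modified, so a corrected Module can rebuild any aggregate from it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: rebuild aggregates from an append-only event journal.
public class ReplaySketch {

    public record Event(String taxFamilyId, long amount) {}

    // Buggy Module: silently drops negative amounts (e.g. deductions).
    public static final Function<List<Event>, Long> BUGGY =
            evs -> evs.stream().mapToLong(Event::amount).filter(a -> a > 0).sum();
    // Fixed Module.
    public static final Function<List<Event>, Long> FIXED =
            evs -> evs.stream().mapToLong(Event::amount).sum();

    public static final class Hub {
        private final List<Event> journal = new ArrayList<>();        // append-only
        private final Map<String, Long> aggregates = new HashMap<>(); // derived, can be truncated

        public void append(Event e) { journal.add(e); }

        public Long aggregate(String id) { return aggregates.get(id); }

        // Truncate one family's aggregate and rebuild it from the journal.
        public void rerun(String id, Function<List<Event>, Long> module) {
            aggregates.remove(id);
            List<Event> events = new ArrayList<>();
            for (Event e : journal) {
                if (e.taxFamilyId().equals(id)) events.add(e);
            }
            aggregates.put(id, module.apply(events));
        }
    }

    public static void main(String[] args) {
        Hub hub = new Hub();
        hub.append(new Event("family-1", 100));
        hub.append(new Event("family-1", -30));
        hub.append(new Event("family-2", 999));

        hub.rerun("family-1", BUGGY);
        System.out.println(hub.aggregate("family-1")); // 100
        hub.rerun("family-1", FIXED);
        System.out.println(hub.aggregate("family-1")); // 70
    }
}
```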

Simulation is always on the business's wish list. Simulation Modules can easily run side by side with the real ones by producing to dedicated aggregates for the simulated result. Simulated aggregates are only valid in a limited context and do not affect the real process flow.
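Here is a sketch of simulation running side by side with the real flow. It assumes aggregates are namespaced by a context string and that the real process flow only reads the `real` context. The tax rules and rates are made-up placeholders.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongUnaryOperator;

// Hypothetical sketch: same Module logic, different target context.
public class SimulationSketch {

    // "real" is the only context the process flow reads (assumption).
    public record Key(String context, String taxFamilyId) {}

    public static final class Aggregates {
        private final Map<Key, Long> data = new HashMap<>();
        public void put(Key k, long v) { data.put(k, v); }
        public Long get(Key k) { return data.get(k); }
    }

    // The Module is parameterised only by its rule and where it produces its result.
    public static void runModule(Aggregates store, String familyId, long income,
                                 LongUnaryOperator taxRule, String targetContext) {
        store.put(new Key(targetContext, familyId), taxRule.applyAsLong(income));
    }

    public static void main(String[] args) {
        Aggregates store = new Aggregates();
        LongUnaryOperator currentRule = inc -> inc * 30 / 100;  // placeholder rate
        LongUnaryOperator proposedRule = inc -> inc * 25 / 100; // placeholder rate

        runModule(store, "family-1", 1000, currentRule, "real");
        runModule(store, "family-1", 1000, proposedRule, "simulation:rate-25");

        System.out.println(store.get(new Key("real", "family-1")));               // 300
        System.out.println(store.get(new Key("simulation:rate-25", "family-1"))); // 250
    }
}
```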

The Continual Aggregate Hub restaurant

Think of the CAH as a restaurant. Within the restaurant there are tables with customers who place orders, waiters who serve them, and a kitchen with cooks. A table in the CAH is a collection of Aggregates; in our domain, the “Tax Family”. (All tax families are independent and can be processed in parallel.) The waiters serve tables that have changes that need to be served. A change results in an order containing the relevant aggregates, which the waiter brings to the kitchen. All orders are placed in a queue (or an order buffer of some sort), and the cooks process orders at their own capacity. It is important that the cook does not run to the shop for every ingredient; the kitchen must already have the resources needed to process the order. The kitchen is therefore autonomous (so it can scale by adding more cooks, or specialized cooks), but it of course follows a defined protocol (types of orders and known aggregates). When the dish (aggregate) is finished, it is brought back to the table (it is stored).

So the main message is that the cook must not go to the shop while cooking. I think this is where many systems go wrong: somewhere in the code, the program does a search here or there. Don’t collect information as you calculate. Separate the concerns: collect the data, process it, and store the result!
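The restaurant fits in a few lines of code. The waiter (`placeOrder`) collects everything up front. The cook (`cook`) is a pure function with no access to the store. The kitchen stores the dish afterwards. All names and the income/deduction fields are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;

// Hypothetical sketch: collect, process and store as separate concerns.
public class RestaurantSketch {

    // An order carries every ingredient (aggregate) the cook needs.
    public record Order(String taxFamilyId, List<Long> incomes, long deductions) {}

    // The dish: a produced aggregate.
    public record Assessment(String taxFamilyId, long taxableIncome) {}

    // Cook: pure processing with no store access, so it never "goes to the shop".
    public static Assessment cook(Order order) {
        long gross = 0;
        for (long income : order.incomes()) gross += income;
        return new Assessment(order.taxFamilyId(), Math.max(0, gross - order.deductions()));
    }

    public static final class Restaurant {
        final Map<String, List<Long>> incomeTable = new HashMap<>();
        final Map<String, Long> deductionTable = new HashMap<>();
        final Map<String, Assessment> served = new HashMap<>();
        private final Queue<Order> orders = new ArrayDeque<>();

        // Waiter: collects all data for a changed table and places the order.
        public void placeOrder(String familyId) {
            orders.add(new Order(familyId,
                    incomeTable.getOrDefault(familyId, List.of()),
                    deductionTable.getOrDefault(familyId, 0L)));
        }

        // Kitchen: processes queued orders; each dish goes back to its table (is stored).
        public void runKitchen() {
            Order next;
            while ((next = orders.poll()) != null) {
                Assessment dish = cook(next);
                served.put(dish.taxFamilyId(), dish);
            }
        }
    }

    public static void main(String[] args) {
        Restaurant r = new Restaurant();
        r.incomeTable.put("family-1", List.of(400L, 600L));
        r.deductionTable.put("family-1", 150L);
        r.placeOrder("family-1");
        r.runKitchen();
        System.out.println(r.served.get("family-1").taxableIncome()); // 850
    }
}
```

Since `cook` depends only on its `Order`, it can be tested with hand-built orders and run by any number of parallel cooks.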


Continual data hub architecture and restaurant by Tormod Varhaugvik is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.