Why is this?

Good Service Orientation should bring clear separation of concern, easier maintenance, less code, independent deploy and release cycles, more frequent releases, easier sourcing, and a higher degree of flexibility (among others). The idea of a bus (ESB) is good enough, but it does not relieve you from the real challenge; complexity and functional dependency. Where you previously had FTP files separating silos, they now must both be up and running. When things break you have 100.000 broken messages on the bus to clean up. These messages are a long way from home; they break out of context. Probably they are better understood within their domain.

The challenge is to find a design and migration strategy with lower maintenance cost in the long run. You should make things simpler and testable, by using DDD on your system portfolio.The intention with these integration patterns are good; the Aggregate and Canonical patterns promise encapsulation but often end up with handling complexity outside of its context. That leads to a tough maintenance situation.

Scenario

The initial stage where silos send and depend on information directly from each other:

|

| Silos supporting and depending on each other |

|

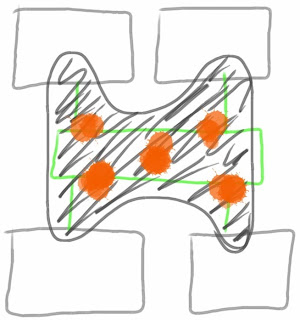

| An ESB tries to make things easier , but the dependency is still there |

|

| Black Octopus tentacles |

There is also another problem with this approach. Most tools (and actually their prescribed usage as taught in class) let the ESB product make adapters that go into the silo. Many silos have boundaries, like CICS, but others offer database connections so that adapters for the ESB actually glue into the implementation (of the silo). Now we are getting into en even more serious maintenance hell. Each silo has a maintenance cycle and organization supporting the complex systems it is. By not involving this organization and not letting this organization support the services they actually offer to the environment, you will have trouble. The organization must know what they silo is being used for. How else are they going to support SLA, or make sure that only consistent data leave the silo? This is illustrated as a black Octopus with tentacles into the systems having different parts of the organization tied up even closer.

|

| Context Bleeding |

It is a much better situation for those with the deep knowhow of the silo to construct and support the services and canonical messages they offer. The integration team should mostly be concerned with structure and not content.

The same can be said about business processes orchestrated outside of their domain; it may get into a "make minor" approach that does not enhance ease of maintenance. Too often there is high coupling between process state and domain state. (see Enterprise Wide Business Process Handling)Better approach

|

| Dark green ACL maintained by each silo |

This is not complete without emphasizing the importance of functional decomposition between the silos, so that they have a clearer objective in the system portfolio. But this takes time, and often you need an in-between solution. ESB-tools are nice for such ad-hoc, but don't let it be your new legacy. Strive for granular business level services, so that you limit the "chatting" between systems and make usage more understandable (but this standardization is more a business challenge, than an IT challenge). Too many ESB's end up like CRUD repositories; illustrating only an open bleeding wound of the silo.

The objective is: Low Coupling - High Cohesion. Software design big or small - the same rules apply.

Dont let the Enterprise Service Bus lead to Context Bleeding by Tormod Varhaugvik is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.